By Team BuzzBizzAI

21 Jan, 2026

AI has made marketing faster, cheaper, and occasionally weird in ways that feel like a brand guideline being fed through a paper shredder. It has also created a new trust problem: audiences can no longer tell what is real, what is synthetic, and what is simply aggressively optimized.

That is why “ethical AI marketing” is quickly becoming a necessity rather than a nice-to-have. Trust should be the first algorithm we follow.

Disclosure talks, everything else walks

Regulators are pushing toward transparency, especially when people are interacting with AI or consuming synthetic content.

Image source: Freepik

In the EU, the AI Act includes transparency obligations that, in many cases, require users to be informed when they are interacting with an AI system. It also sets requirements for labeling or marking synthetic content such as deepfakes. Some countries are moving even faster. Spain, for example, has pursued rules with significant penalties for failing to properly label AI-generated content. In the UK, the ASA has advised advertisers to disclose AI use when it features prominently and is unlikely to be obvious to consumers.

In the US, the FTC’s truth-in-advertising principles still apply, including requirements around endorsements and material connections, which matters when AI starts showing up as a “person” who appears to recommend something.

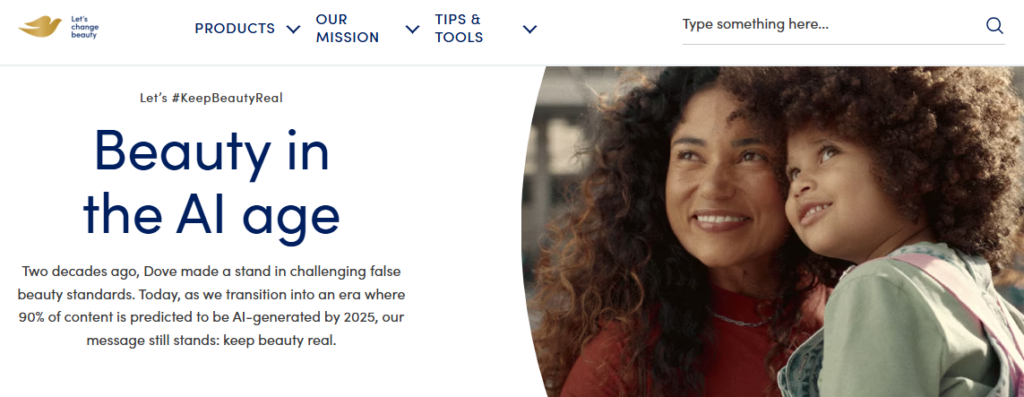

Dove chooses a line in the sand

Dove’s “Keep Beauty Real” pledge is a clear ethical position: it says it will not use AI to create or distort women’s images in its advertising. This is not just brand purpose theater. It is also a practical trust strategy in a world where synthetic beauty can be generated on demand, in infinite variations, with all the realism of a high-end render.

Image source: Dove

The lesson: sometimes the most ethical AI strategy is refusing a use case that would undermine your brand’s credibility, even if it would be cheaper and faster.

Coca-Cola shows what happens when craft becomes the controversy

Coca-Cola’s AI holiday advertising has sparked criticism for visual inconsistencies and a general sense that the work looked off, even when a large team was involved in producing and curating massive volumes of generated clips. This is not just an aesthetic debate. It is a trust debate. When viewers feel a brand is cutting corners, “innovation” starts to sound like “we found a way to not pay for the expensive part.”

The lesson: transparency without quality does not buy trust. If the output feels low-effort, disclosure can become a spotlight rather than a shield.

Valentino and the luxury trap

Luxury brands operate on the idea that someone cared. When AI-heavy visuals become the headline, that premise gets tested. Valentino faced backlash for an AI-driven campaign that many viewers found uncomfortable or cheap, even with disclosure. The reaction points to a specific risk: in categories built on craft, AI can read as cost-cutting, not creativity.

The lesson: in premium storytelling, the ethical question is not only “did you disclose it,” but also “did you still earn the right to ask for attention and money.”

Transparency tech is coming for your content

A growing counterweight to synthetic confusion is content provenance. The C2PA standard and Content Credentials aim to provide a verifiable record of how content was created and edited, which can help audiences and platforms distinguish between authentic and synthetic media. For brands, this is heading toward a future where “trust marks” become as normal as accessibility labels.

What responsible brands do in 2026

If you want a practical ethical playbook, it looks like this:

Disclose when it matters: If AI use is not obvious and could affect consumer interpretation, label it clearly, especially for synthetic people, voices, and altered reality.

Ground assistants in real knowledge: Most “AI mistakes” are actually knowledge management failures. Garbage FAQ, garbage answers.

Audit for bias and harm: If your model changes tone or outcomes by demographic or language, it is not personalization. It is a liability.

Use provenance where possible: Start experimenting with Content Credentials for high-risk assets like spokesperson content, claims-heavy visuals, and anything that could be mistaken for real footage.

Do not outsource judgment: AI can generate, summarize, and optimize. It cannot be trusted to understand brand ethics, context, or consequences.
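
For teams that want to turn the playbook into a pre-publish gate, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the `Asset` fields, the `HIGH_RISK_KINDS` categories, and the wording of the actions are hypothetical names, not a legal standard or an existing tool. It only encodes the disclosure, provenance, and human-judgment rules above; it does not replace legal review.

```python
from dataclasses import dataclass

# Hypothetical categories borrowed from the playbook's wording.
# Treating them as always needing a label is an assumption of this sketch.
HIGH_RISK_KINDS = {"synthetic_person", "synthetic_voice", "altered_reality"}


@dataclass
class Asset:
    name: str
    uses_ai: bool
    ai_use_obvious: bool = False          # would a typical viewer recognise it as AI?
    affects_interpretation: bool = False  # could AI use change how the content is read?
    kind: str = "other"                   # e.g. "synthetic_person", "illustration"
    human_reviewed: bool = False          # has a person signed off on it?


def prepublish_checks(asset: Asset) -> list[str]:
    """Return the follow-up actions the playbook suggests for one asset."""
    actions: list[str] = []
    if not asset.uses_ai:
        return actions

    high_risk = asset.kind in HIGH_RISK_KINDS

    # Disclose when it matters: non-obvious AI use that could affect
    # interpretation, and (by assumption) every high-risk kind.
    if high_risk or (not asset.ai_use_obvious and asset.affects_interpretation):
        actions.append("add a clear AI disclosure label")

    # Use provenance where possible: start with high-risk assets.
    if high_risk:
        actions.append("attach provenance metadata (e.g. Content Credentials)")

    # Do not outsource judgment: a human signs off before anything ships.
    if not asset.human_reviewed:
        actions.append("get human sign-off before publishing")

    return actions


if __name__ == "__main__":
    spokesperson = Asset(name="holiday-spot", uses_ai=True, kind="synthetic_person")
    for action in prepublish_checks(spokesperson):
        print(f"{spokesperson.name}: {action}")
```

A checklist like this is deliberately boring. Its job is to make sure the disclosure question gets asked for every asset, not to answer the harder question of whether the work deserves attention in the first place.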

The uncomfortable truth is that AI makes it easy to produce marketing. It does not make it easy to produce marketing people trust. That part still requires humans, plus a little humility, plus a disclosure label that does not look like it was added by a lawyer who hates fonts.
